RESEARCH PROJECTS:

These are the projects I undertook as a graduate research assistant at the ASCC lab:

- Human-Robot Collaborative Manipulation

- Robot Learning from Demonstrations

- Role of physiological signals (EEG, EMG) in human-robot interaction

COURSE PROJECTS:

Apart from my research projects, I have also found course projects very helpful in cementing theoretical ideas and understanding their application to real-world problems.

- Embedded Sensing and Computing - Control of Mobile Robot using PDA

- Stochastic Systems - Exploiting Spatial Dependency of SIFT Features for Image Fusion

- Mobile Robotics - Improving the tele-presence capability of the Rovio mobile robot

- Computer Vision

- Pattern Recognition

- Artificial Intelligence

Research Projects

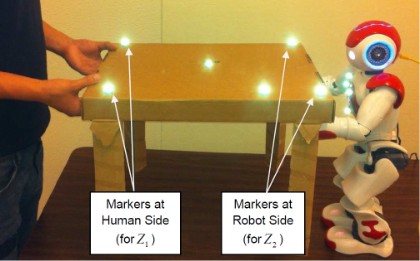

1. Human-Robot Co-operative Manipulation

Performing co-operative manipulation tasks is one of the most basic physical human-robot interaction tasks. Manipulating a table collaboratively is a typical example.

Traditionally, the role of the collaborating robot is fixed to that of a simple follower. In this research, we investigate how the robot can use estimates and predictions of human motion to determine its role. The extended Kalman filter is used to predict the human motion and to generate a confidence measure for its prediction.
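As a rough illustration of how a Kalman-type filter yields both a prediction and a confidence, here is a minimal sketch. It is not the code from the paper: it assumes a 1-D constant-velocity motion model, for which the extended Kalman filter reduces to the ordinary linear Kalman filter, and the noise values `q` and `r` as well as all function names are hypothetical.

```python
def kf_predict(x, P, dt, q):
    """Predict step for state x = [position, velocity] with 2x2 covariance P.

    Assumed model: F = [[1, dt], [0, 1]] and process noise Q = q * I.
    """
    pos, vel = x
    x_pred = [pos + dt * vel, vel]
    p00, p01, p10, p11 = P[0][0], P[0][1], P[1][0], P[1][1]
    # P_pred = F P F^T + Q, written out by hand for the 2x2 case
    P_pred = [
        [p00 + dt * (p01 + p10) + dt * dt * p11 + q, p01 + dt * p11],
        [p10 + dt * p11, p11 + q],
    ]
    return x_pred, P_pred


def kf_update(x, P, z, r):
    """Update step for a position-only measurement z with variance r (H = [1, 0])."""
    s = P[0][0] + r                      # innovation variance
    k0, k1 = P[0][0] / s, P[1][0] / s    # Kalman gain
    y = z - x[0]                         # innovation
    x_new = [x[0] + k0 * y, x[1] + k1 * y]
    # P_new = (I - K H) P
    P_new = [
        [(1.0 - k0) * P[0][0], (1.0 - k0) * P[0][1]],
        [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]],
    ]
    return x_new, P_new


def track(measurements, dt=0.1, q=1e-4, r=1e-2):
    """Run the filter over a list of position measurements."""
    x, P = [measurements[0], 0.0], [[1.0, 0.0], [0.0, 1.0]]
    for z in measurements[1:]:
        x, P = kf_predict(x, P, dt, q)
        x, P = kf_update(x, P, z, r)
    return x, P


def predict_ahead(x, P, dt, q, steps):
    """Predict `steps` ahead; return (predicted position, position variance)."""
    for _ in range(steps):
        x, P = kf_predict(x, P, dt, q)
    return x[0], P[0][0]
```

The predicted position variance grows with the prediction horizon, so it can serve as a (inverse) confidence: a robot could, for example, act proactively only while the variance stays below some threshold and fall back to reactive following otherwise. That switching rule is my illustration here, not a statement of the exact rule used in the paper.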

For complete details about this work, please refer to my IROS'11 paper.

Results are shown in the following figure and video.

The video compares the case where the robot's behavior is entirely reactive with the case in which the robot has both proactive and reactive behaviors. The performance is visibly better in the latter case.

The figure shows the trajectories of the human and the robot 'ends' of the table for the two cases.

The proposed system shows a clear improvement over the simple follower (reactive) approach.

2. Robot Learning from Demonstration

a. Imitation of Hand Gestures

Robots are coming into our lives to assist us with day-to-day activities. An important issue is how to program them. Since they are expected to be handled by people who are not robotics experts, we need a simple, convenient, intuitive and natural way to program them. Traditional explicit programming techniques will not work. Hence, the solution is to program or teach robots by demonstration, just as human beings teach each other.

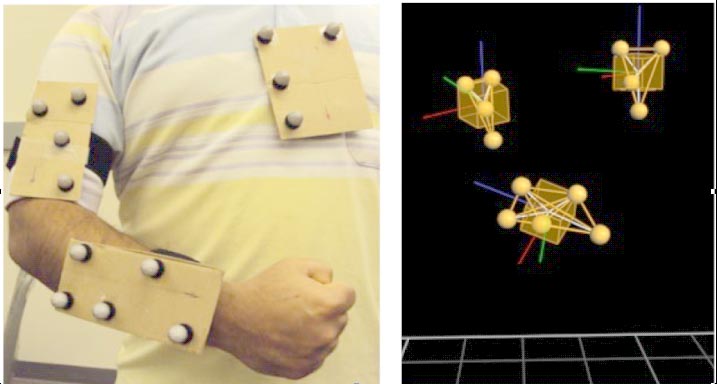

Joint angles of the human arm can be obtained using the motion capture system.

There is bound to be temporal misalignment between multiple demonstrations because the gesture is performed at a varying pace. Hence, we must temporally align the signals. Dynamic Time Warping (DTW) gives a nonlinear mapping between two signals so that the signals are optimally aligned. A weighted average of the aligned signals is then taken to find a mean trajectory, which the robot uses to imitate the hand gesture. Further technical details may be found in my ICIA'10 paper.
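The alignment-then-averaging idea can be sketched as follows. This is a minimal textbook DTW on 1-D signals (for example, a single joint angle), not the implementation from the paper; the function names, the absolute-difference cost and the way samples are resampled onto the reference timeline are my assumptions for illustration.

```python
def dtw(a, b):
    """Classic DTW between two 1-D sequences.

    Returns (total cost, warping path as a list of (i, j) index pairs).
    """
    n, m = len(a), len(b)
    inf = float("inf")
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    # Backtrack from the end to recover the optimal path
    i, j = n, m
    path = [(i - 1, j - 1)]
    while (i, j) != (1, 1):
        _, i, j = min(
            (D[i - 1][j - 1], i - 1, j - 1),
            (D[i - 1][j], i - 1, j),
            (D[i][j - 1], i, j - 1),
        )
        path.append((i - 1, j - 1))
    path.reverse()
    return D[n][m], path


def warp_onto(reference_len, demo, path):
    """Resample `demo` onto the reference timeline using the DTW path."""
    buckets = [[] for _ in range(reference_len)]
    for i, j in path:
        buckets[i].append(demo[j])
    return [sum(values) / len(values) for values in buckets]


def weighted_mean(trajectories, weights):
    """Weighted average of equally long, already aligned trajectories."""
    total = sum(weights)
    length = len(trajectories[0])
    return [
        sum(w * traj[k] for w, traj in zip(weights, trajectories)) / total
        for k in range(length)
    ]
```

A typical use would be to pick one demonstration as the reference, warp every other demonstration onto it with `dtw` and `warp_onto`, and then call `weighted_mean` on the aligned set. How the weights are chosen is not specified here; equal weights are the simplest option.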

The following video shows the results of this work.

b. Imitation of Gestures in Presence of Missing Data

Although the DTW algorithm works well for gesture imitation, it cannot handle cases where the acquired trajectories contain missing data. In this work, we remedy the situation by proposing two modifications to the imitation system. The modified block diagrams are shown below.

We propose two approaches: the Interpolation approach and the Modified DTW approach. We found that the Modified DTW strategy works better for trajectories that contain a large amount of missing data, whereas trajectories with only small patches of missing data are generalized well by the Interpolation approach. The details are provided in the HUMANOIDS'10 paper.
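To give a feel for the interpolation idea, here is a minimal sketch that fills gaps in a 1-D trajectory before alignment. It assumes missing samples are marked with `None` and uses plain linear interpolation with the edge values held constant; the actual interpolation scheme in the paper may differ, and the function name is hypothetical.

```python
def fill_missing(samples):
    """Fill None entries by linear interpolation between known neighbours.

    Gaps at the start or end are filled by holding the nearest known value.
    """
    filled = list(samples)
    known = [i for i, value in enumerate(filled) if value is not None]
    if not known:
        raise ValueError("no valid samples to interpolate from")
    # Leading and trailing gaps: hold the first / last known value
    for i in range(known[0]):
        filled[i] = filled[known[0]]
    for i in range(known[-1] + 1, len(filled)):
        filled[i] = filled[known[-1]]
    # Interior gaps: straight line between the two surrounding known samples
    for left, right in zip(known, known[1:]):
        gap = right - left
        for i in range(left + 1, right):
            frac = (i - left) / gap
            filled[i] = filled[left] + frac * (filled[right] - filled[left])
    return filled
```

A straight line is a reasonable guess across a short gap but becomes a poor stand-in for the real motion across a long one, which is consistent with the observation above that interpolation suits small patches of missing data.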

The results are shown in the following figure.

The figure shows the alignment results obtained using the proposed methods and the final generalized trajectories.

c. Task-level Imitation - Learning to reach out and hold a table

The previous works focused on trajectory level imitation. For learning from demonstration to be usable at a higher level of abstraction, the robot should also be able to imitate the task by extracting relevant task constraints from the demonstrations.

In this work, the robot learns a simple table grasping task by observing the movement of the human's hand with respect to the object of interest (the table). The experimental setup is shown below.

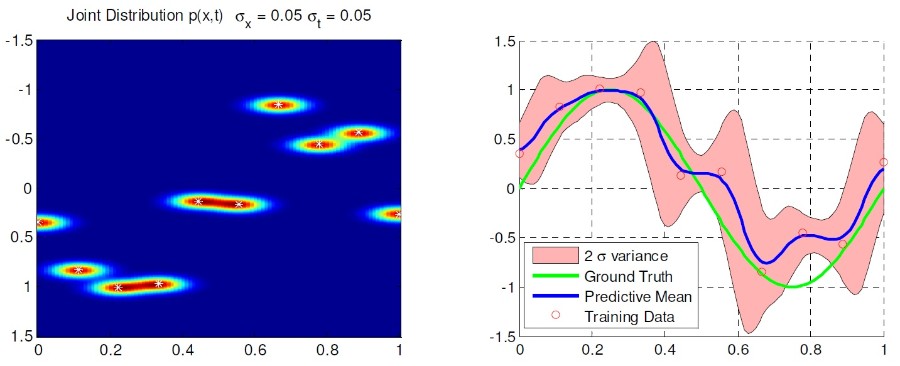

We use the GMM/GMR framework for generalizing from multiple demonstrations. The results are shown below.
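The regression half of GMM/GMR can be sketched in a few lines. This is a simplified illustration, not the code from the paper: it assumes a 1-D input (time `t`) and a 1-D output (`x`), and it takes the mixture parameters as given, whereas in practice they would be fitted to the demonstrations (typically with EM). The dictionary keys and function names are hypothetical.

```python
import math


def gaussian_pdf(t, mean, var):
    """Density of a 1-D Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (t - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def gmr(t, components):
    """Gaussian Mixture Regression: expected output x given input t.

    Each component is a dict with keys:
      weight  - mixing coefficient
      mean_t, mean_x - component means of input and output
      var_t   - input variance
      cov_tx  - covariance between input and output
    """
    # Responsibility of each component for the query input t
    resp = [c["weight"] * gaussian_pdf(t, c["mean_t"], c["var_t"]) for c in components]
    total = sum(resp)
    estimate = 0.0
    for h, c in zip(resp, components):
        # Conditional mean of x given t for this component
        cond_mean = c["mean_x"] + c["cov_tx"] / c["var_t"] * (t - c["mean_t"])
        estimate += (h / total) * cond_mean
    return estimate
```

Evaluating `gmr` over a range of `t` values gives a smooth generalized trajectory that blends the local linear trends of the individual Gaussians. Note that a query far from every component can make `total` underflow to zero, which this sketch does not guard against.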

Read more about this project in my IJHR paper.

3. Role of Physiological Signals in Human-Robot Interaction

The ability to read physiological signals such as EEG and EMG gives robots a unique capability that is not available to humans. This opens up an interesting area of human-robot interaction that goes beyond simple audio-visual interaction. We investigate the use of such signals for human-robot interaction.

a. EEG based humanoid robot control

One of the simplest applications of the EEG signal could be to teleoperate a robot. The focus of this work was to develop a wearable computing system which consists of an EEG acquisition device (Emotiv Epoc), a PC and a head-mounted display. The setup is shown below.

The software platform developed is shown below.

Software Architecture

The results are shown in the following videos:

b. Using EMG signals for Human-Robot interaction

In the co-operative manipulation set-up, the humanoid robot relies on human arm motion for prediction and role selection.

It has been found that the EMG signal (muscle activation) precedes the actual mechanical motion by about 80 ms. The robot can use this lead time to compensate for its processing and actuation delays, thus providing a more natural interaction.

This work is currently in progress. A data-acquisition system using the TI ADS1298 is being developed.

Preliminary experiments for classifying hand gestures have shown success.

Academic Projects

Academic projects have helped me to understand the concepts learned in class and relate them to real-world problems. Additionally, I am always on the lookout to connect these projects with my research work.

1. Embedded Sensing and Computing (ECEN 5060) (Fall-2009)

Controlling a mobile robot (Pioneer 3-DX) using a PDA (Windows Mobile 6.5).

We used the .NET Compact Framework for GUI development, and the networking code was written in C#. An infrastructure-based network was used for communication. The most challenging part of the project was streaming video from the robot to the PDA. At QVGA resolution we could get about 5 fps.

Setup

GUI - The right and bottom slider bars control the speed and steering of the robot. The left and top sliders control the pan-tilt of the camera.

2. Stochastic Systems (ECEN 5513) (Fall-2009)

Exploiting spatial dependency of features for efficient Image Stitching

Most successful image stitching approaches are feature-based. However, most of them do not exploit the fact that the features to be matched lie in almost the same neighborhood of features in both images.

For this project, we extracted SIFT features and matched them. Spatially independent features were removed. The remaining outliers were then removed using RANSAC, and the corresponding homogeneous transformation was obtained.
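The RANSAC outlier-removal step can be sketched as follows. To keep the example short and dependency-free, it deliberately swaps the full homogeneous transformation for a translation-only model, so a single matched pair is enough to form a hypothesis; a real stitching pipeline would sample four pairs and solve for a homography instead. The function name, threshold and iteration count are assumptions of mine.

```python
import random


def ransac_translation(matches, threshold=2.0, iterations=100, seed=0):
    """Estimate a 2-D translation from matched points, ignoring outliers.

    matches: list of ((x1, y1), (x2, y2)) pairs of matched feature locations.
    Returns ((dx, dy), inlier_matches).
    """
    if not matches:
        raise ValueError("need at least one match")
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        # Hypothesis: the shift implied by one randomly chosen match
        (x1, y1), (x2, y2) = rng.choice(matches)
        dx, dy = x2 - x1, y2 - y1
        # Consensus set: matches whose own shift agrees with the hypothesis
        inliers = [
            (p, q)
            for p, q in matches
            if abs((q[0] - p[0]) - dx) <= threshold
            and abs((q[1] - p[1]) - dy) <= threshold
        ]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # Refit the model on the largest consensus set
    n = len(best_inliers)
    dx = sum(q[0] - p[0] for p, q in best_inliers) / n
    dy = sum(q[1] - p[1] for p, q in best_inliers) / n
    return (dx, dy), best_inliers
```

The fewer outliers that reach this stage, the fewer random hypotheses are wasted on bad matches, which is one reason a spatial-consistency filter ahead of RANSAC can be useful.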

3. Mobile Robotics (ECEN 5060) (Spring-2010)

We modified the EEG-based humanoid robot control system to control a Rovio mobile robot instead.

4. Computer Vision (ECEN 5283) (Spring-2010)

There were several mini-projects.

Project 1 - Geometric Camera Calibration

Application - Augmented Reality

Project 2 - Edge detection (Canny, matched filter)

Application - Retinal blood vessel extraction

Project 3 - Laplacian and Gabor Filters

Application - Texture Classification

Project 4 - Clustering using K-means and Expectation Maximization

Application - Texture-based Image segmentation using features extracted from Gabor Filters and clustered using K-means and Expectation Maximization

Project 5 - Jump-Diffusion Markov Chain Monte Carlo (MCMC)

Application - Target tracking

Project 6 - Feature extraction and selection

Application - Face recognition using PCA and classification based on Euclidean Distance

5. Pattern Recognition (ECEN 5060) (Fall-2010)

Project 1 - Least Squares Curve Fitting with Regularization

Bayesian Curve fitting

Project 2 - Parameter Estimation using Bayesian techniques (with Conjugate Priors)

Project 3 - Regularized linear regression and Bayesian regression with Gaussian priors

Project 4 - Classification using Least Squares, Fisher Discriminants and Generative Models (GMM)

Project 5 - Kernel methods

Nadaraya-Watson, Gaussian Process Regression and Classification

Classification using Gaussian Process

6. Artificial Intelligence (CS 5793) (Spring-2011)

Project 1 - STRIPS planner

Project 2 - Inference on Bayes nets using enumeration, rejection sampling and likelihood weighting

Project 3 - Optical flow using neighborhood search

7. Bachelor's Project (University of Pune) (Fall 2008, Spring 2009)

We designed an offline handwritten signature recognition system using a feed-forward multilayer perceptron network. The system was tested on the GPDS signature database.